Inspiration

What started out as a class project and then another ended up becoming a project I spent a large amount of time on during the spring semester and early summer of 2020. The original assignment was to develop a Java program to determine the current state of the game (whether someone had won, the game was tied, or it was still ongoing).

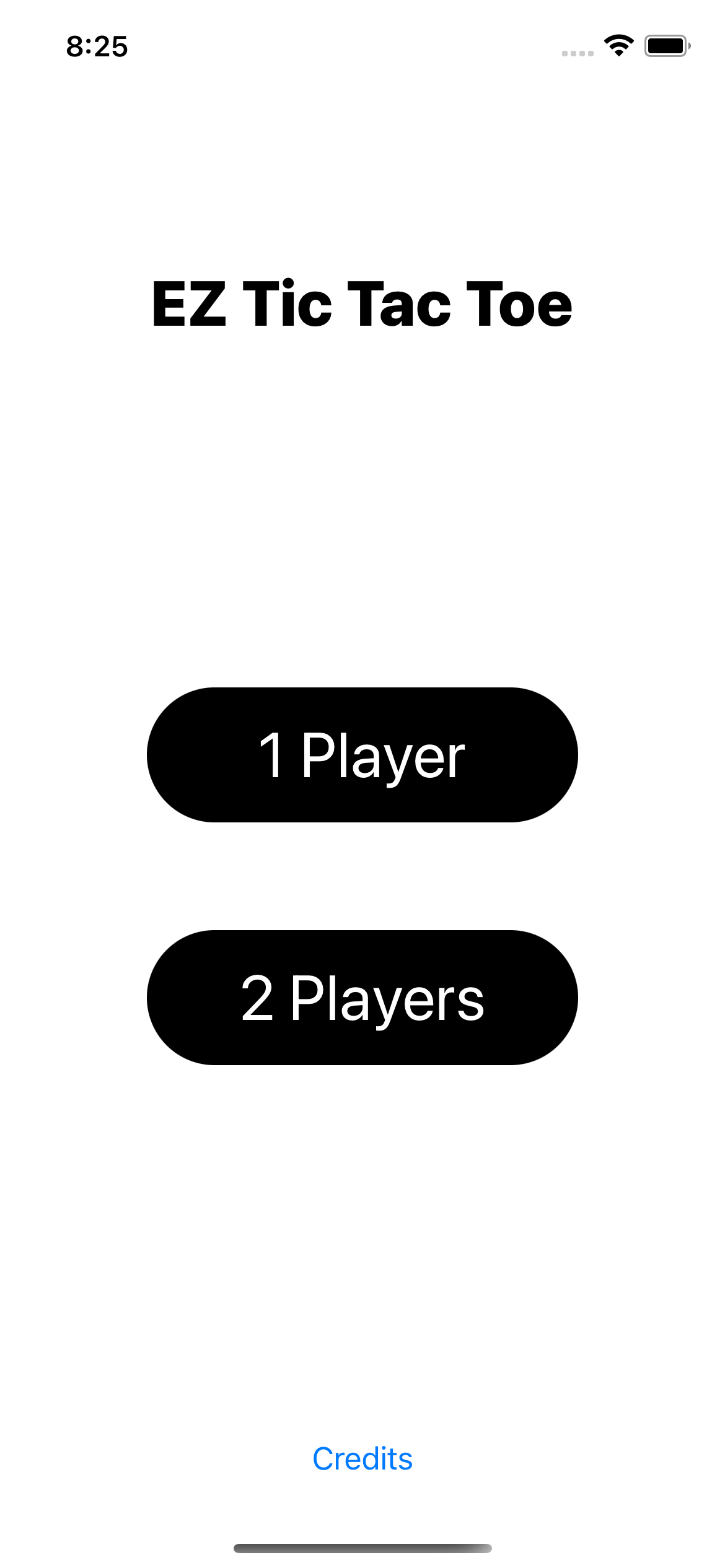

Being fond of Swift, wanting to build out an app, and having a project I knew I could build, I did what anyone in my situation would do and spent an inordinate amount of time to develop a relatively simple app. My goal: to build a Tic Tac Toe app that had a clean design and was well tested using the skills I was developing in the class that had inspired the project.

Features/Outcomes

-

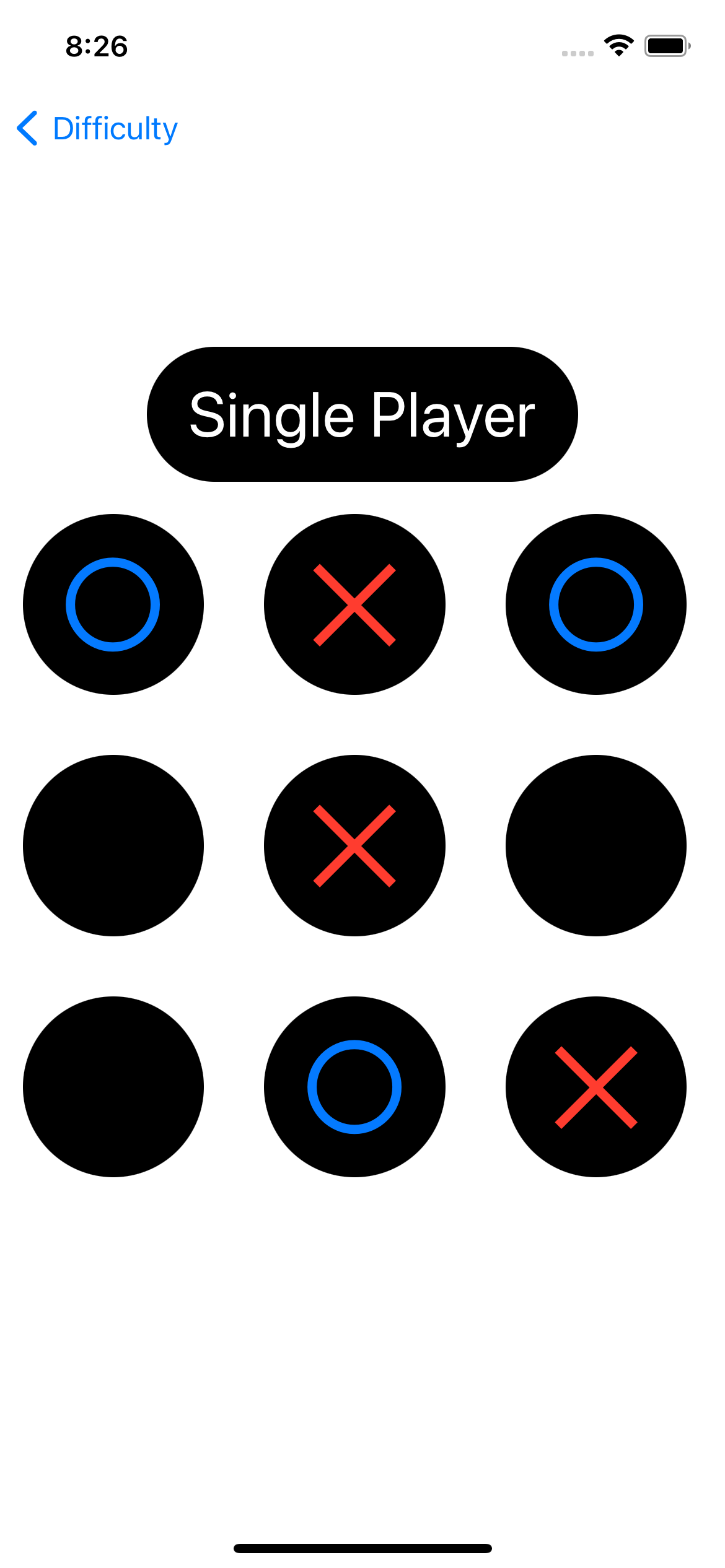

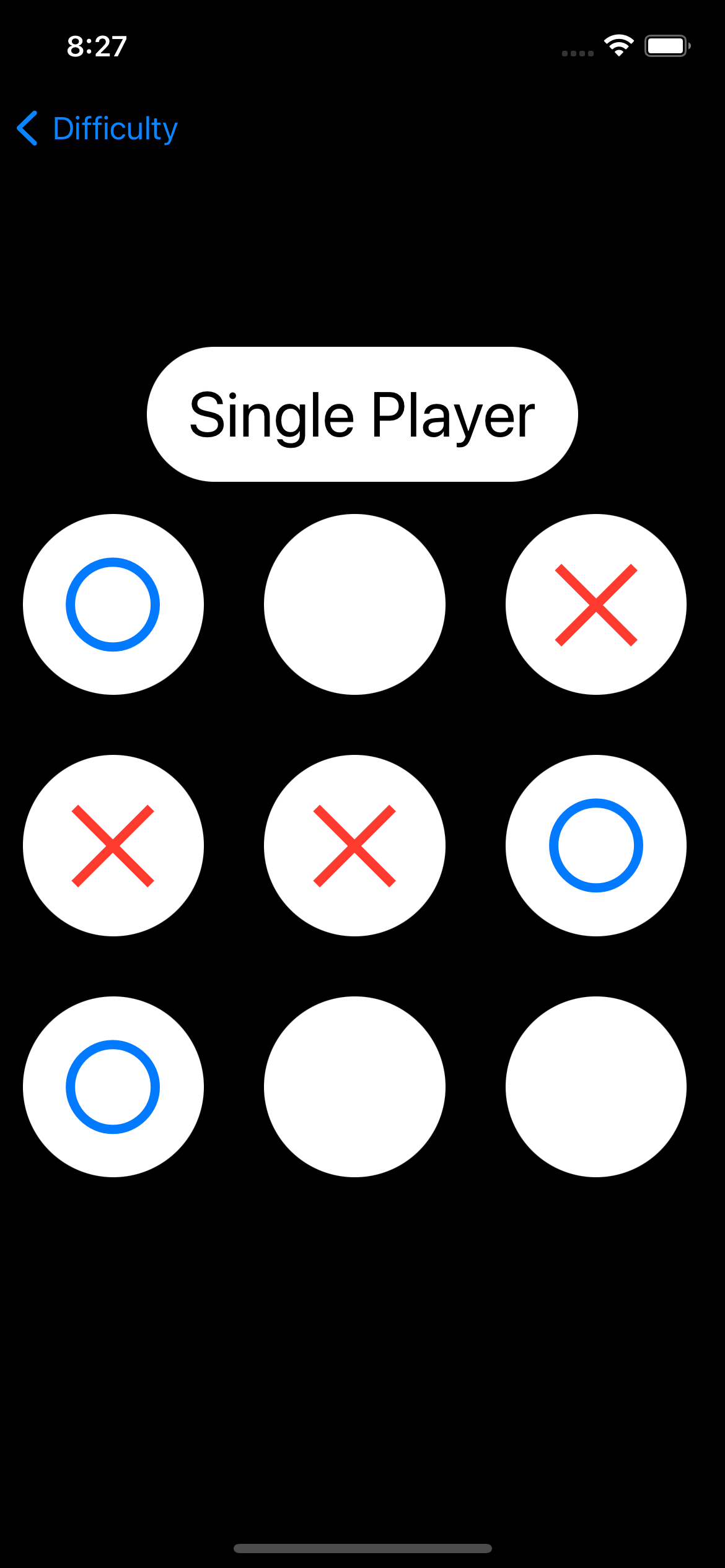

Full singleplayer mode

- Computer uses minimax algorithm to determine computer's next best move

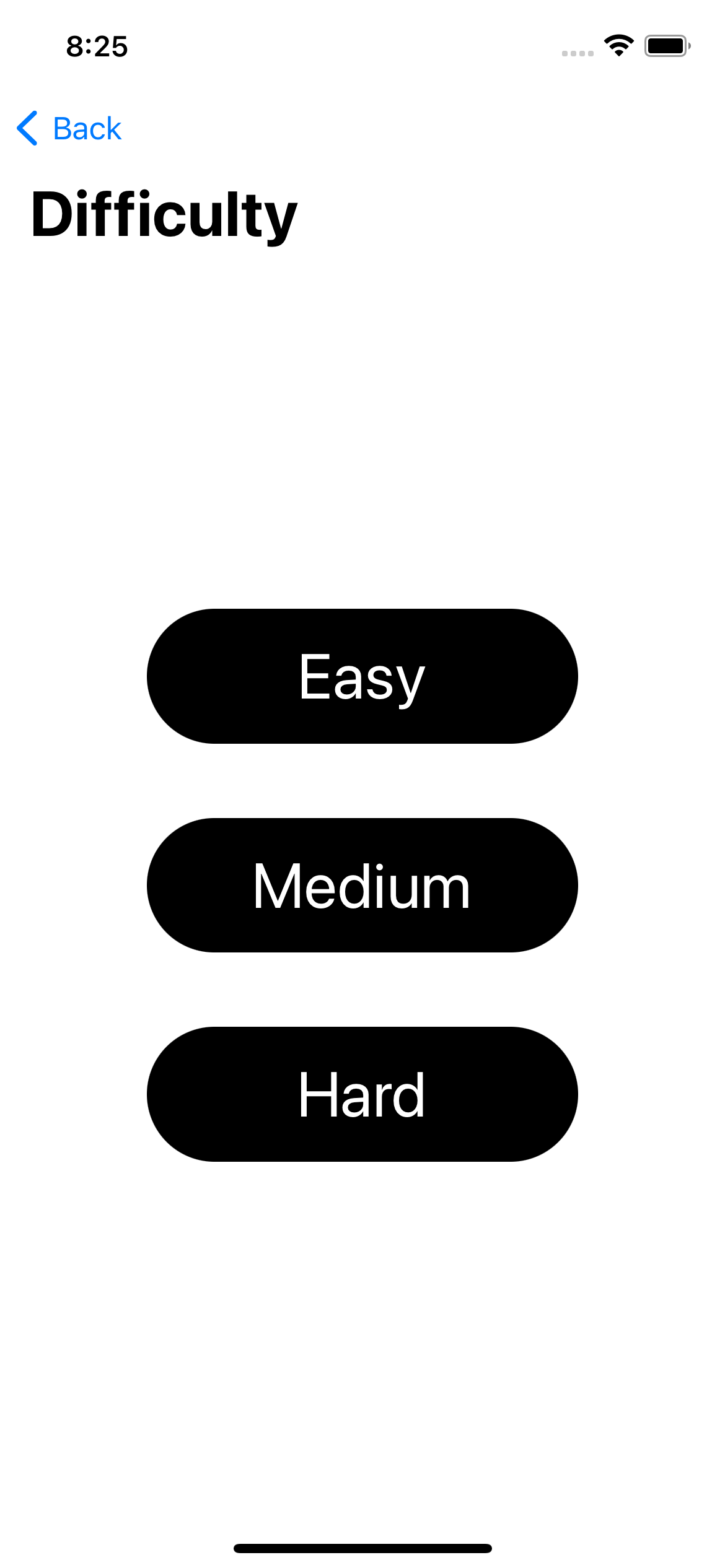

- User-selectable difficulty level that allows for the player to win

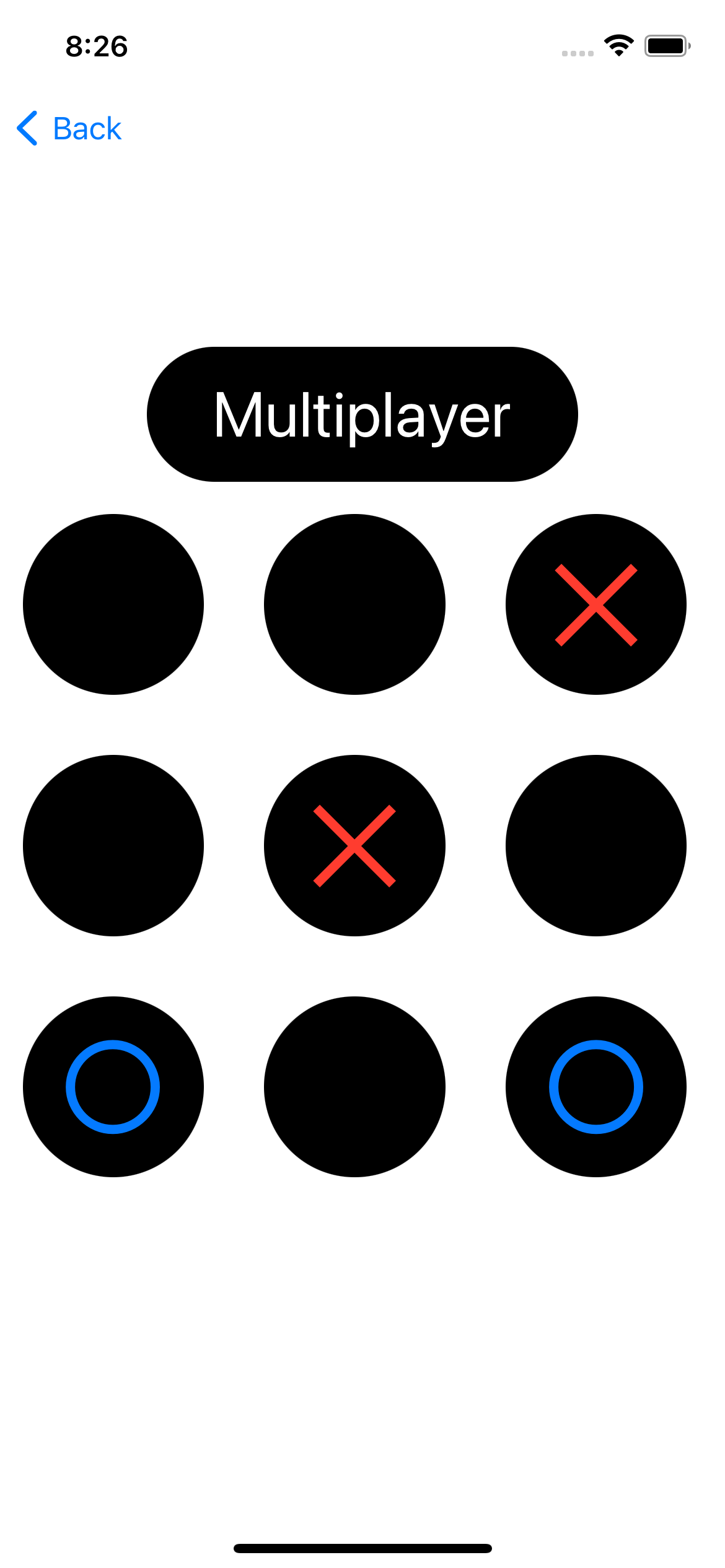

- Local multiplayer mode

- 80.2% code coverage with unit tests and UI tests

- Continuous integration workflow built using GitHub Actions

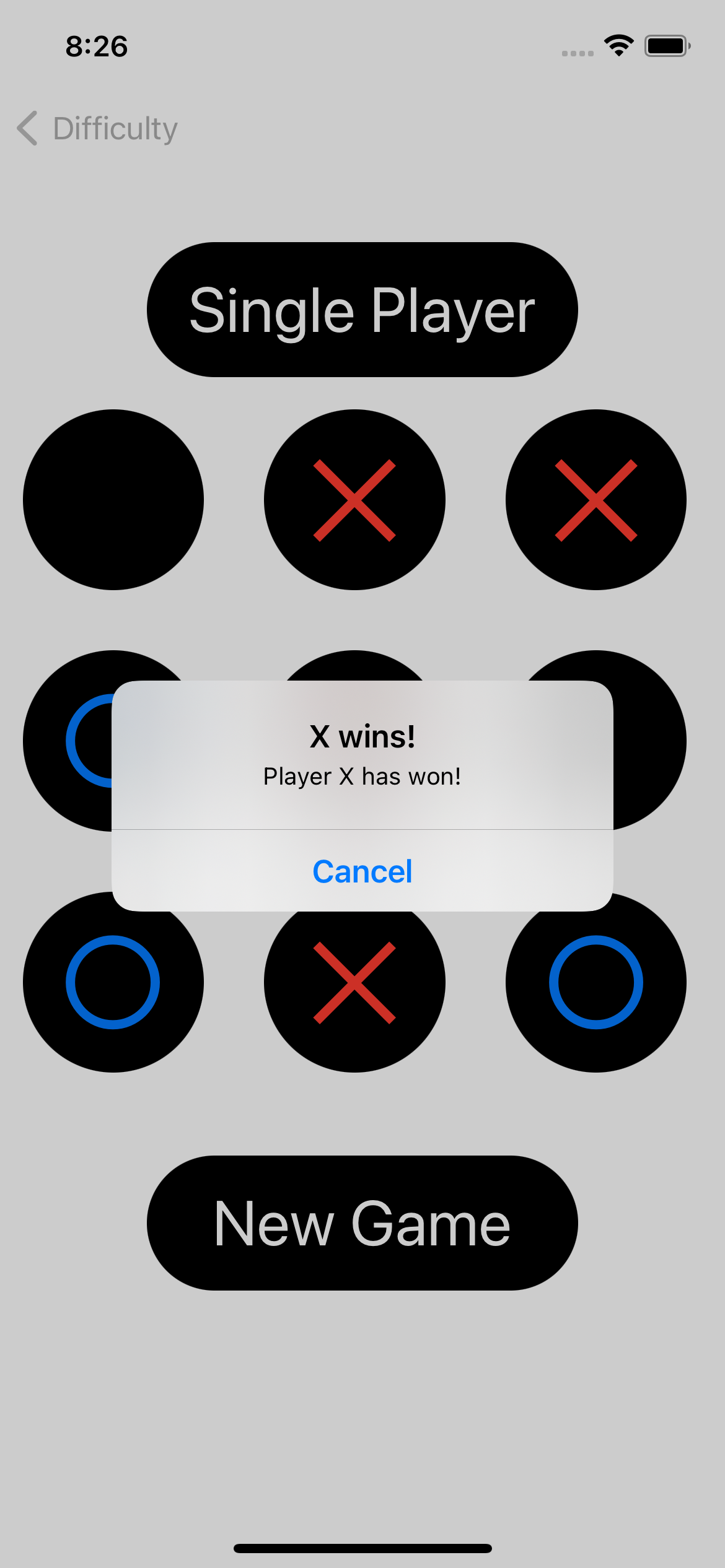

- Clean UI with both light and dark mode support

Screenshots

Technical Details

While my minimax implementation can be intimidating, I hope to break it down a little further here. For starters, here's the function header and documentation comments:

The eagle-eyed among you may have noticed this function is for a class called GameState, but also takes in a GameState as an argument. The reason I did this was so that I could make the function static, and therefore could minimize the likelihood of side-effects occuring by trying to calculate the best move. One major downside of this approach is that it's slower than if I were to do the modifications in-place and keep track of changes. However, I found that in the real world the performance benefits were not worth the risks of programmer error causing issues.

Before we go any further, the rest of the explanation assumes knowledge of the minimax algorithm. For an explanation, here's a video on the topic.

The function implementation has 3 steps:

- Evaluate the current board (base case)

- Determine which player's turn it is

- Recursively search for the best solution

Step 1:

Before we start trying to play new moves, we need to check if the game is already over. For this, I wrote a set of if statements that evaluates whether or not the board was a good outcome. To do this, I check the board's state. If a player won and the player is equal to the player the algorithm is optimizing for, I return a positive number. If it's equal to the other player, I return a negative number. In the event of a tie, I return 0.

One final issue to solve is prioritizing moves that are good now over moves that are good later. This was something that took me a while to grasp. Before I implemented the depth system, the algorithm would choose moves that were nonsensical. This was because it was equating moves in the current to moves in the future. As such, a move that allowed for two wins in the future while keeping the opponent's winning opportunities equal was favored over a move to win the game. In order to solve this, I added depths to prioritize the moves that win the game over moves that don't. Even more importantly, when the AI is in a losing situation, it won't give up and pick a random move but will instead pick the move the draws out the game the longest.

Step 2:

This part is much more straight-forward than the other two. All I do here is pick the corresponding piece to play based on whether I'm on a turn that's minimizing or a turn that's maximizing. There's probably a better way to do this, but I prefer the simple way instead as I'm able to visually determine what's going on very easily.

Step 3:

So this part's where things get a little difficult to explain without going line-by-line. In general, what I'm doing is setting the value from step 2 in every available tile and recursively stepping through the game to determine what the outcome would be if both players made the best possible move. Then, I use whichever move provides the best possible outcome of the moves available.

Lines 3-5 are pretty self-explanatory as they simply iterate over all the empty cells. After that, I make a copy of the board so I don't mess up the current board's data (6-7). On lines 9 & 10, I set the cell I'm testing to the new value. On line 12 is the recursive step, and I find out how the game will likely end if I were to play that move. Then, I compare the move's value against the current value stored (if there is one yet) and pick the best one. Finally, I return the best-case scenario for any moves that could be made with that given state.